- Topic

38k Popularity

19k Popularity

45k Popularity

17k Popularity

43k Popularity

19k Popularity

7k Popularity

4k Popularity

97k Popularity

29k Popularity

- Pin

- 🎊 ETH Deposit & Trading Carnival Kicks Off!

Join the Trading Volume & Net Deposit Leaderboards to win from a 20 ETH prize pool

🚀 Climb the ranks and claim your ETH reward: https://www.gate.com/campaigns/site/200

💥 Tiered Prize Pool – Higher total volume unlocks bigger rewards

Learn more: https://www.gate.com/announcements/article/46166

- 📢 ETH Heading for $4800? Have Your Say! Show Off on Gate Square & Win 0.1 ETH!

The next bull market prophet could be you! Want your insights to hit the Square trending list and earn ETH rewards? Now’s your chance!

💰 0.1 ETH to be shared between 5 top Square posts + 5 top X (Twitter) posts by views!

🎮 How to Join – Zero Barriers, ETH Up for Grabs!

1.Join the Hot Topic Debate!

Post in Gate Square or under ETH chart with #ETH Hits 4800# and #ETH# . Share your thoughts on:

Can ETH break $4800?

Why are you bullish on ETH?

What's your ETH holding strategy?

Will ETH lead the next bull run?

Or any o

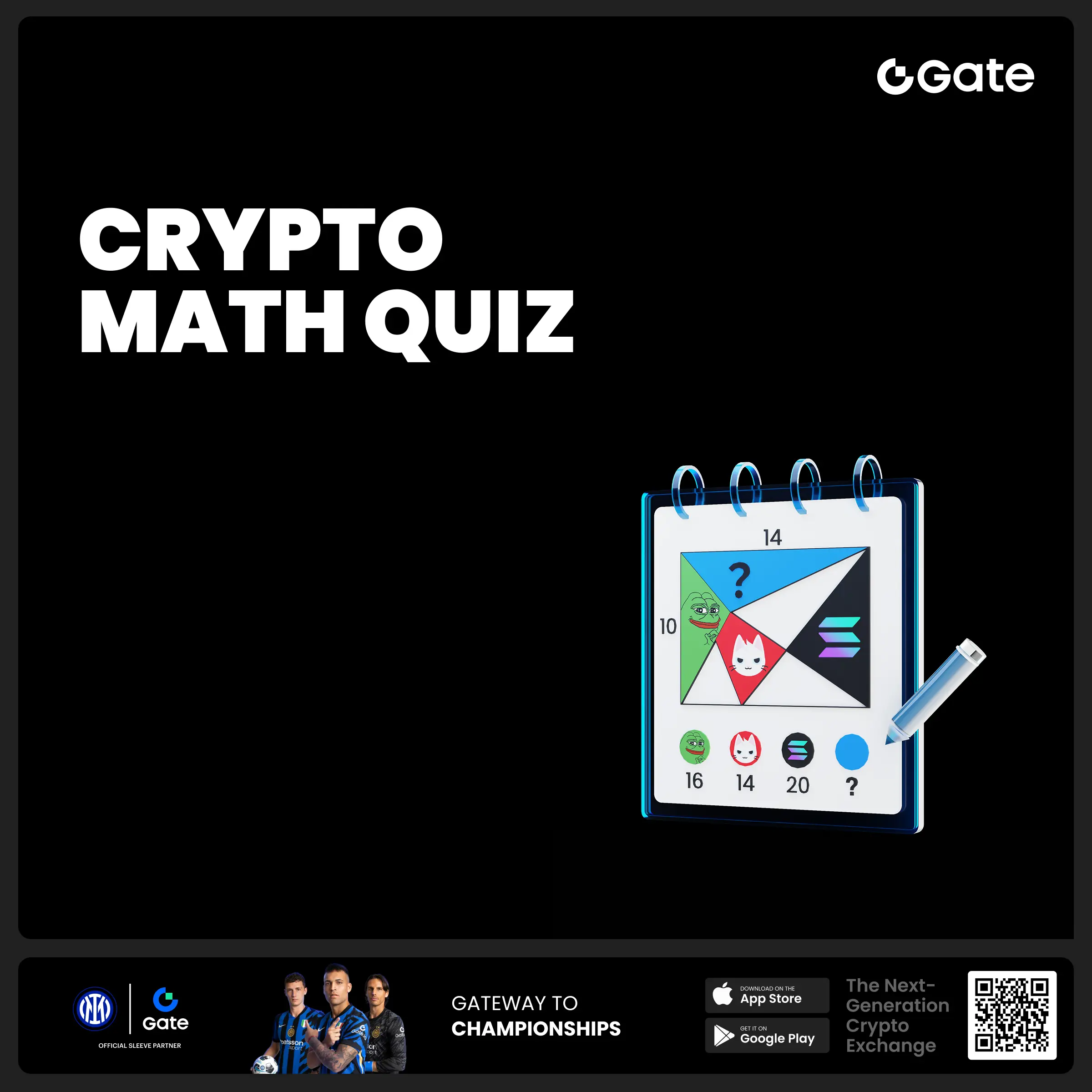

- 🧠 #GateGiveaway# - Crypto Math Challenge!

💰 $10 Futures Voucher * 4 winners

To join:

1️⃣ Follow Gate_Square

2️⃣ Like this post

3️⃣ Drop your answer in the comments

📅 Ends at 4:00 AM July 22 (UTC)

- 🎉 #Gate Alpha 3rd Points Carnival & ES Launchpool# Joint Promotion Task is Now Live!

Total Prize Pool: 1,250 $ES

This campaign aims to promote the Eclipse ($ES) Launchpool and Alpha Phase 11: $ES Special Event.

📄 For details, please refer to:

Launchpool Announcement: https://www.gate.com/zh/announcements/article/46134

Alpha Phase 11 Announcement: https://www.gate.com/zh/announcements/article/46137

🧩 [Task Details]

Create content around the Launchpool and Alpha Phase 11 campaign and include a screenshot of your participation.

📸 [How to Participate]

1️⃣ Post with the hashtag #Gate Alpha 3rd - 🚨 Gate Alpha Ambassador Recruitment is Now Open!

📣 We’re looking for passionate Web3 creators and community promoters

🚀 Join us as a Gate Alpha Ambassador to help build our brand and promote high-potential early-stage on-chain assets

🎁 Earn up to 100U per task

💰 Top contributors can earn up to 1000U per month

🛠 Flexible collaboration with full support

Apply now 👉 https://www.gate.com/questionnaire/6888

OpenAI's founder and chief scientist share a rare stage: the most exciting thing in AI is happening!

Author: Zhu Xueying

As ChatGPT's monthly activity reached the 1 billion milestone, OpenAI founder Sam Altman's "global tour" has also drawn attention.

This week, Sam Altman visited Tel Aviv University in Israel for a lively, in-depth interview alongside Ilya Sutskever, OpenAI's chief scientist, who grew up in Israel (the two rarely share a stage). At one point Sam was momentarily at a loss for words, and the conversation is worth hearing.

The interview is both substantive and entertaining.

For example, Ilya happily said that his parents had told him their friends also use ChatGPT on a daily basis, which surprised him very much. And when an audience member asked, "You are making history; how do you want history to remember you?", Ilya replied wittily: **"I mean, in the best way."**

The following is the full text of the interview:

A Ph.D. and a dropout: two different lives before OpenAI

Ilya:

From the age of 5 to 16, I lived in Jerusalem. Between 2000 and 2002 I studied at the Open University. I then moved to the University of Toronto, where I spent 10 years and earned BA, MA and PhD degrees. During my graduate studies, I had the privilege of contributing to important advances in deep learning. Then I co-founded a company with some people that was acquired by Google, where I worked for a while.

Then, one day I got an email from Sam who said, "Hey, let's hang out with some cool people." I was intrigued, so I went for it. That was the first time I met Elon Musk and Greg Brockman, and we decided to start the OpenAI journey. We've been doing this for many years, so this is where we are currently.

Sam:

As a kid, I was very excited about AI and was a sci-fi nerd. It never occurred to me that I would have the opportunity to work on it, but then in college I did work on it for a while. It hadn't really taken off yet, though; this was around 2004... so I dropped out of school and did a startup.

Some time later, after the progress Ilya mentioned, I got really excited about what was going on with AI and sent him an email, and we're still going.

The advantages of OpenAI

Host:

What do you see as the key strengths that make OpenAI a leader in generative AI, especially when its competitors are often larger and have more resources?

Sam:

We believe a key advantage is that we are more focused on what we do. We also have a higher talent density than larger companies, which is important and often misunderstood. **Our culture values both rigor and repeatable innovation, and it is difficult and rare for the two to coexist.**

Ilya:

Yes, I can only add a small amount to Sam's answer. **This is a game of faith: more faith means more progress.** You will make the most progress if you have a lot of faith. It might sound like a joke, but it's actually true. You have to believe in the idea and push it hard; that's what drives progress.

The position of academia in the field of AI

Host:

Recent advances in artificial intelligence have been largely driven by industry. What role do you think academic research should play in the development of the field?

Ilya:

The role of academia in the field of AI has changed significantly. Academia used to be at the forefront of AI research, but that has changed. There are two reasons: computing power and engineering culture. Academia has less computing power and often lacks an engineering culture.

However, academia can still make significant and important contributions to AI. **Academia can unravel many mysteries of the neural networks we are training; we are creating complex and amazing objects.**

What is deep learning? It is an alchemical process in which we use data as the raw material, combined with computational energy, to obtain this intelligence. But what exactly is it? How does it work? What properties does it have? How do we control it? How do we understand it? How do we apply it? How do we measure it? These are all open questions.

**Even on simple measurement tasks, we cannot accurately assess the performance of our AI. This wasn't a problem in the past because AI wasn't all that important. Artificial intelligence is very important now, but we realize that we still can't fully measure it.**

So I can think of problems that nobody has solved yet. You don't need huge computing clusters or huge engineering teams to ask these questions and make progress. If you do break through, it will be a compelling and meaningful contribution that everyone will pay attention to right away.

Host:

We want to see progress that is balanced between industry and academia, and we want to see more of these types of contributions. Do you think anything can be done to improve the situation? From your position in particular, is there some kind of support you can offer?

Ilya:

I think a change of mindset is the first and most important thing. I'm somewhat removed from academia these days, but I think there is something of a crisis in what we're doing.

There is too much momentum around producing a large number of papers, when what matters is solving the most critical problems. We need to change our mindset and focus on the most important issues. **We can't just focus on what we already know; we need to be aware of what's wrong.** Once we understand the problems, we can move towards solving them.

Plus, we can help. For example, we have an Academic Access Program where academics can apply to gain computing capacity and access to our state-of-the-art models. Many universities have written papers using GPT-3, studying the properties and biases of the models. If you have more ideas, I'd love to hear them.

Open source or not open source

Host:

While some players actively promote open source releases of their models and code, others do not do enough. This also concerns OpenAI. So I'm wondering, first of all, what's your take on this? And if you agree, why do you think OpenAI's strategy is the right one?

Sam:

**We have open-sourced some models and plan to open-source more over time. But I don't think open sourcing everything is the right strategy.** Today's models are interesting and may be useful for some things, but they are still relatively primitive compared to what we are about to create. I think most people would agree with that. If we knew how to make a super powerful AGI that has many advantages but also disadvantages, open sourcing it might not be the best option.

**So we're trying to find a balance.** We will open source some things, and as our understanding of the models improves, we will be able to open source more over time. We've released a lot of stuff, and I think many of the key ideas that others are using to build language models right now come from OpenAI's releases, such as the early GPT papers, the scaling laws, and the RLHF work. But it's a balance we'll have to figure out as we go forward. We face many different pressures and need to manage them successfully.

Host:

So are you considering making the model available to a specific audience, rather than open sourcing it to the world? What are you thinking about, as a scientist, now that GPT-4 training is finished?

Sam:

It took us almost eight months to understand GPT-4, make it safe, and figure out how to align it. We have external auditors, red teams, and the scientific community involved. So we are taking these steps and will continue to do so.

AI risks that cannot be ignored

Host:

I do think risk is a very important issue, and there are probably at least three categories of risk.

The first is economic dislocation, in which jobs become redundant. The second may be situations where powerful weapons end up in the hands of a few. For example, if hackers were able to use these tools, they could do things that previously would have taken thousands of hackers. The last category is probably the most worrisome: the system gets out of control, and even whoever set it in motion cannot stop its behavior. I'd like to know your thoughts on what each of these scenarios might look like.

Ilya:

OK, let's start with the possible scenarios for economic disruption. As you mentioned, **there are three risks: jobs being affected, hackers gaining access to superintelligence, and systems getting out of control.** Economic disruption is indeed a situation we are already familiar with, as some jobs are already affected or at risk.

In other words, certain tasks can be automated. For example, if you are a programmer, Copilot can write functions for you. The case of artists is somewhat different, since much of their economic activity has already been replaced by image generators.

I don't think this is really a simple question. **Even though new jobs will be created, economic uncertainty may persist for a long time; I'm not sure whether that will be the case.**

In any case, however, we need something to smooth the transition to the new professions that will emerge, even though those professions don't exist yet. This requires the attention of the government and the social system.

Now let's talk about hacking. Yes, this is a tricky question. AI is really powerful, and bad actors can use it in powerful ways. We need to apply frameworks similar to those used for other very powerful and dangerous tools.

Note that we are not talking about today's artificial intelligence, but about capabilities that increase over time. Right now we are at a low point, but when we get there it will be very powerful. **This technology can be used in amazing applications; it can be used to cure diseases, but it can also create diseases worse than anything that came before.**

**Therefore, we need structures in place to control the use of this technology. Sam, for example, has put forward a document proposing a framework for controlling very powerful artificial intelligence, similar to the IAEA's framework for nuclear energy.**

Host:

The last question is about superintelligent artificial intelligence getting out of control. It could become a huge problem. It would be a mistake to build a superintelligent artificial intelligence that we don't know how to control.

Sam:

I can add some points. Of course, I totally agree with the last sentence.

On the economic front, I find it difficult to predict future developments. I think that's because there is so much excess demand in the world right now, and these systems are really good at helping get things done. But in most cases today, they cannot do every task.

**I think in the short term things are looking good and we're going to see a significant increase in productivity.** If we could make programmers twice as productive, the amount of code needed in the world would more than triple, so everything looks fine.

In the long run, I think these systems will handle increasingly complex tasks and categories of work. Some of these jobs may disappear, **but others will become more like jobs that really require humans and human relationships.** People really want humans in these roles.

These roles may not be obvious. For example, when Deep Blue defeated Garry Kasparov, the world witnessed artificial intelligence for the first time. At the time everyone said chess was over and no one would play chess anymore because it didn't make sense.

Yet there is broad agreement that chess has never been more popular. Human players have gotten stronger; expectations have simply risen. We can use these tools to improve our own skills, but people still really enjoy playing chess, and people still seem to care about other people.

You mentioned that DALL-E can create great art, but people still care about the people behind the art they want to buy, and we all think those creators are special and valuable.

Take chess again: people pay more attention to human chess, and more people watch human chess than ever before, yet few people want to watch a match between two AIs. So I think there are going to be all these unpredictable factors. **I think humans crave differentiation (between humans and machines).**

The need to create something new to gain status will always be there, but it will manifest itself in a really different way. I bet that jobs 100 years from now will be completely different from today's jobs, though many things will still be very similar. But I really agree with what Ilya said: **whatever happens, we need a different kind of socioeconomic contract, because automation has reached hitherto unimaginable heights.**

Host:

Sam, you recently signed a petition calling for the existential threat of artificial intelligence to be taken seriously. Perhaps companies like OpenAI should take steps to address this problem.

Sam:

I really want to stress that we're not talking about today's systems here, or small startups training models, or open source communities.

I think it would be a mistake to impose heavy regulation on the field right now, or to try to slow down incredible innovation. But we really don't want to create a misaligned superintelligence; that seems indisputable. **I think the world should see this not as a sci-fi risk that will never come, but as something we might have to deal with in the next decade. It takes time to get used to some things, and a decade is not long.**

So we came up with one idea, and hopefully there will be better ones. If we can build a global organization covering the systems with the highest levels of computing power at the technological frontier, a framework can be developed to license models and audit their safety to ensure that they pass the necessary tests. This would help us treat the issue as a very serious risk. We do something similar with nuclear energy.

Will AI accelerate scientific discovery, cure disease, and solve climate problems in the future?

Host:

Let's talk about the upside. Since we are in a scientific setting, I wonder about the role of artificial intelligence in scientific discovery, in a few years or further into the future.

Sam:

**This is the thing I'm personally most excited about with AI.** I think there are a lot of exciting things going on: huge economic benefits, huge healthcare benefits. But beyond that, artificial intelligence can help us make scientific discoveries that are currently impossible. We would love to learn about the mysteries of the universe and even more. I truly believe that scientific and technological progress is the only sustainable way to make life better and the world a better place.

If we can develop a lot of new scientific and technological advances, and I think we've already seen the beginnings of this, with people using these tools to increase efficiency, that will matter a great deal. If you imagine a world where you can say, "Hey, help me cure all diseases," and it can help you cure all diseases, that world might be a better place. I don't think we're that far away from that.

Host:

Aside from diseases, another major problem is climate change, which is very tricky to solve. But I don't think it will be particularly difficult to deal with climate change once we have really powerful superintelligent systems.

Ilya:

Yes, you need a lot of carbon capture. You need the energy for carbon capture, you need the technology to build it, and you need to build a lot of it. Accelerating scientific progress is something powerful artificial intelligence can do, and with it we could achieve very advanced carbon capture much faster. We could get to very cheap electricity faster, and to cheaper manufacturing faster. Now combine all three of cheap electricity, cheap manufacturing, and advanced carbon capture, build a lot of them, and you suck all this excess CO2 out of the atmosphere.

**If you have a strong artificial intelligence, it will greatly accelerate progress in science and engineering.** That would make such a plan much easier than it is today. I believe this will accelerate progress, and it shows that we should dream bigger. You can imagine that if you were able to design such a system, you could ask it to tell you how to make large amounts of clean energy at low cost, how to capture carbon efficiently, and how to build a plant that would do those things. If you can achieve these, then you can achieve a great deal in many other fields as well.

Wonderful ChatGPT

Host:

I've heard that you didn't expect ChatGPT to become so widespread. Are there any examples where you were genuinely surprised by the value and capabilities other people found in it?

Ilya:

I was so surprised and delighted when my parents told me how their friends use ChatGPT in their daily lives. It's hard to pick one out of many endearing stories that showcase the brilliance of human creativity and how people harness this powerful tool.

Education has been a great area for us. So many people write in to say that it has changed their lives, with messages like "This is really transformative for me, because now I can learn anything," or "I learned this specific thing," or "I didn't know how to do this before, and now I do."

Personally, seeing people learn in a new and better way, and imagining what it will be like in a few years, is very satisfying and beautiful. We didn't fully expect this to happen at this pace, which is really amazing.

Then there is an interesting story, which I just heard yesterday. A man reportedly spends two hours every evening writing bedtime stories with his children. The stories are about the children's favorite things, and it has become a special moment. They have a great time every night.

Questions from the audience:

Sam: At a loss for words...

Ilya:

Regarding the question of open source and non-open source models, we don't have to think in binary, black-and-white terms. It's not as though there is a secret sauce that can never be rediscovered.

**Maybe one day there will be an open source model that replicates the capabilities of GPT-4, but this process will take time, and by then the large companies may have an even more powerful model.** Therefore, there will always be a gap between open source models and private models, and this gap may gradually increase. The amount of work, engineering and research required to make such a neural network will continue to increase.

So, **even if open source models exist, fewer and fewer of them will be made by small groups of dedicated researchers and engineers; they will come from a company, a big company (contributing to open source).**

Sam:

I think this is a very fair and important question. The hardest part of our work is balancing the enormous potential offered by artificial intelligence with the serious risks associated with it. We need to take time to discuss why we face these risks and why they exist in the first place.

I do think things look better when we compare today's standard of living, and how much more we now expect for people, with the past. Compared with 500 or 1,000 years ago, people's living conditions have improved a lot. We ask ourselves: can you imagine people living in extreme poverty? Can you imagine people suffering from diseases? Can you imagine a situation where not everyone is well educated? These were the realities of barbaric times.

**Although AI poses some risks, we also see its potential to improve our lives, advance scientific research, and solve global problems.**

We need to continue to develop AI in a responsible manner, with regulatory measures in place to ensure safety and ethical concerns are properly addressed. Our goal is to make artificial intelligence a tool for human progress, not a threat. This requires our joint efforts, including the participation of the technical community, government and all parties in society, to establish a sustainable and ethical framework for the development of artificial intelligence.

As for how to do it, I also think this progress is unstoppable. Technology does not stand still; it continues to evolve. So, as a large company, we have to figure out how to manage the risks that come with that.

Part of the reason is that the risk, and the approach required to address it, are extraordinary. We had to create a framework different from traditional structures. We have a profit cap, and I believe incentives are an important factor. If you design the right incentives, they can often lead to the behavior you want.

So we try to make sure that everything works well without being driven to make more or less profit. We don't have the incentive structure of a company like Facebook. I think the people at Facebook are brilliant, but they operate within an incentive structure that has some challenges.

As Ilya often says, we tried to get a feel for AGI when we first started the company, and only later built the profit structure. So we have to strike a balance between our need for computational resources and our focus on the mission. One of the topics we discuss is what kind of structure allows us to embrace regulation with enthusiasm, even when it hurts us the most.

And now is that time: **we are pushing globally for the regulation that will have the biggest impact on us.** Of course we will follow the rules. I think when people feel the risk, it's easier for them to behave well and to find a sense of purpose. So I think the leaders of these leading businesses are feeling it now, and you're going to see them react differently than the social media companies did. I think all doubts and concerns are justified. We struggle with this problem every day, and there's no easy answer to it.

Ilya:

The gap between the models you mentioned is indeed a problem.

I mean, we now have GPT-4, which you have access to, **and we really are working on the next model.**

Perhaps I can describe this gap in the following way: as we continue to build and improve AI models with enhanced capabilities, the gap grows, because more capable models require longer testing periods. We work with teams to understand the model's limitations and to exercise it in as many ways as we can, while also gradually extending the model.

For example, GPT-4 now has visual recognition capabilities, but this feature has not yet been launched in the version you are using because the final work has not been completed. We will get there soon. So I think that answers your question: maybe not too far in the future.

*Note: Roko's Basilisk refers to an online discussion and thought experiment involving hypotheses about a future superintelligence.*

*The idea is that this superintelligence could learn, through time travel or other means, which people caused it harm, and punish those who did not help bring it into being. The thought experiment thus raises an ethical dilemma: whether the development of superintelligence should be supported and promoted now to avoid possible punishment in the future.*

Ilya:

While Roko's Basilisk isn't something we're particularly concerned about, we're definitely very concerned about superintelligence.

Probably not everyone, not even everyone in the audience, understands what we mean by superintelligence.

What we're referring to is the possibility of one day building a computer, a cluster of computers in the form of GPUs, that is smarter than anyone and capable of doing science and engineering work faster than large teams of experienced scientists and engineers.

It's insane, and it's going to have a huge impact.

It can design the next version of the AI system and build a very powerful AI. So our position is that **superintelligence has far-reaching effects; it can have very positive effects, but it is also very dangerous, and we need to be careful about it.**

This is where the IAEA (International Atomic Energy Agency) approach you mentioned comes in, for very advanced cutting-edge systems and superintelligence in the future. We need to do a lot of research to harness the power of a superintelligence so that it matches our expectations, for our benefit and for the benefit of humanity.

This is our position on superintelligence, which is the ultimate challenge facing humanity.

Looking back at the evolutionary history of humans, a single-cell replicator appeared about 4 billion years ago. Then, over billions of years, a variety of different single-celled organisms emerged. About a billion years ago, multicellular life began to emerge. Hundreds of millions of years ago, reptiles appeared on the earth. Mammals appeared about 60 million years ago. About 1 million years ago, primates appeared, followed by the emergence of Homo sapiens. 10,000 years later, writing appeared. Then came the Agricultural Revolution, the Industrial Revolution and the Technological Revolution. Now, finally, comes AGI, culminating in superintelligence, our ultimate challenge.

Sam:

I think studying computer science is valuable anyway.

Although I hardly ever write code myself, **I consider studying computer science one of the best things I've ever done. It taught me how to think and solve problems, skills that are very useful in any field.**

Even if the job of a computer programmer looks different 10 to 15 years from now, learning how to learn is one of the most important skills: learning new things quickly, anticipating future trends, being adaptable and resilient, understanding others' needs, and knowing how to be useful.

So there's no question that the nature of work will change, but I can't imagine a world where people don't take the time to do something that creates value for others and all the benefits that come with it. Maybe in the future, we'll care about who has the cooler galaxy, but certain good things (like value creation) won't change.

Ilya:

I mean, in the best possible way.